Supreme Court Must Preserve Section 230’s Strengths While Clarifying Platforms’ Potential Liability for Causing Harm

WASHINGTON — On Monday, the U.S. Supreme Court granted certiorari and agreed to hear an appeal of Gonzalez v. Google LLC, a 2021 Ninth Circuit Court of Appeals decision implicating Section 230 of the Communications Act.

The case turns on whether Google, Facebook, Twitter and other “interactive computer services” — which Section 230 typically protects from liability for content that users post — might be liable for the platforms’ own decisions and actions to amplify or recommend that content.

Both the Ninth Circuit and the federal district court that first heard this case held that Section 230 barred such claims. In this instance, the claims center on Google’s alleged recommendations of terrorist-recruitment videos before the 2015 ISIS attacks in Paris that killed the plaintiff’s daughter. In the same consolidated decision, the Ninth Circuit also ruled on claims against other social-media companies for allegedly similar conduct before similarly deadly attacks, which left the victims’ families understandably devastated and searching for justice.

As Free Press explained in congressional testimony in 2021 and elsewhere, it supports retaining Section 230’s core protections, alongside discussion of careful amendments to the law or further court decisions that would clarify platforms’ liability for their own knowingly harmful actions.

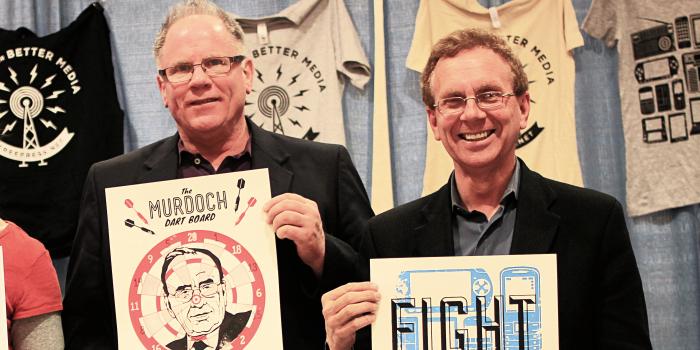

Matt Wood, Free Press vice president of policy and general counsel, said:

“Section 230 is a foundational and necessary law. It benefits not just tech companies large and small, but the hundreds of millions of people who use their services daily. It’s hard to overstate the complexity and importance of cases like the one the Supreme Court has agreed to hear. Section 230 balances the understandable desire and need for accountability for heinous acts like the deadly attacks perpetrated in these cases against the need to protect free expression, open dialogue and all manner of beneficial activities on the modern internet.

“Section 230 lowers barriers to people sharing their own content online without the pre-clearance platforms would demand if they could be liable for everything those people say and do. This law protects platforms from being sued as publishers of other parties’ information, yet it also permits and encourages these companies to make content-moderation decisions while retaining that protection from liability.

“Section 230 thus encourages the open exchange of ideas, but also takedowns of hateful and harmful material. Without those paired protections, we’d risk losing moderation and removal of the very same kinds of videos at issue in this case. We’d risk chilling online expression too, since not all plaintiffs suing to remove ideas they don’t like would be proceeding in good faith as the victims’ families here clearly did. Both risks are especially significant for Black and Brown communities, LGBTQIA+ people, immigrants, religious minorities, dissidents, and all people and ideas targeted for suppression or harassment by powerful forces.

“Section 230 allows injured parties to hold platforms liable for those platforms’ own conduct, as distinct from the content they merely host and distribute for users. But some courts have interpreted the law more broadly and prevented any such test of platforms’ liability for their own actions. Free Press believes platforms could and often should be liable when they knowingly amplify and monetize harmful content by continuing to distribute it even after they’re on notice of the actionable harms traced to those distribution decisions.

“Yet using this case to effectively repeal or drastically alter Section 230 would be a terrible idea. Platforms that filter, amplify or make any content recommendations at all should not automatically be subject to suit for all content they allow to remain up. That kind of on/off switch for the liability protections in the law would encourage two bad results: either forcing platforms to leave harmful materials untouched and free to circulate, or requiring them to take down far more user-generated political and social commentary than they already do.

“It’s important to remember that allowing suits to go forward for the behavior challenged in this case wouldn’t automatically make the platforms liable. It would merely allow plaintiffs to proceed on the difficult path of proving in court that a platform knowingly provided substantial assistance to a terrorist organization. But it’s also important to remember that even the prospect of facing such suits could have a chilling effect, and that determining in Congress or in court what constitutes a terrorist organization or assistance to one could also be a politically charged exercise.”

In January 2022, Free Press joined the American Civil Liberties Union, the Lawyers’ Committee for Civil Rights Under Law and the National Fair Housing Alliance in a Ninth Circuit amicus brief arguing that Facebook violates civil-rights protections by offering advertising tools that discriminate based on users’ race, gender and other protected characteristics.